Innovative rugged GPGPU-based technologies are driving AI solutions for the defense and aerospace industry

With the exponential growth in the number of data inputs within military and defense applications, the ability not only to process that data but also to build computing intelligence from it is critical to mission success and overall human safety. The parallel processing capabilities of GPGPUs are redefining how rugged embedded systems manage and process multiple video and data streams simultaneously.

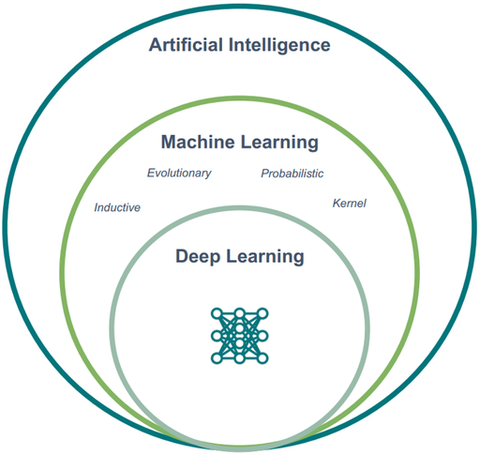

Deep learning, a subset of artificial intelligence (AI) and machine learning that is enabled by GPGPU processing capabilities, uses multi-layered artificial neural networks to deliver state-of-the-art accuracy in critical intelligence areas, including:

- Object detection

- Speech recognition

- Language translation

- Computer systems mimic cognitive thinking (intelligence)

- Machines learn without being explicitly programmed (logic)

- Connections are made between multiple data networks (inference)

Learn how to effectively use GPGPU’s powerful processing capabilities in your military and defense applications

This whitepaper outlines the power consumption and computational challenges of CPU-based HPEC (high-performance embedded computing) systems and then discusses how GPGPU technology can alleviate them.

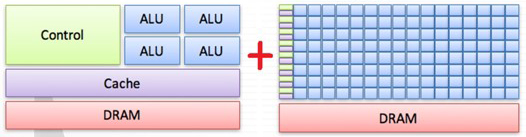

Instead of creating processing bottlenecks, data is distributed across hundreds of parallel CUDA cores, balancing the load, lowering power consumption, and increasing data throughput.

Download Whitepaper

Limitations in CPU processing can lead to data latency and unreliability.

CPUs use a relatively small number of cores optimized for serial processing, each managing data in a single stream

GPUs employ thousands of CUDA cores operating in parallel

Common Misconceptions

When starting to work with any new technology, there will be a degree of uncertainty. Below are some concerns frequently raised by those starting to implement GPGPU-based solutions. If you have specific questions on how best to utilize GPGPU technologies, contact an expert to see what else you can learn.

- GPUs are general purpose, so they can’t really handle complex, high-density computing tasks.

Because GPUs allow applications to spread algorithms across many cores, developers can more easily architect and perform parallel processing. The ability to create many concurrent "kernels," each responsible for a subset of the calculations, gives GPUs the ability to perform complex, high-density computing.
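To make the idea of splitting a calculation across many cores more concrete, here is a minimal sketch in plain Python that emulates, one step at a time, how a single CUDA kernel launch divides an array among threads. The names `launch`, `saxpy_kernel`, and `saxpy` are hypothetical illustrations, and `block_idx`, `block_dim`, `thread_idx`, and `grid_dim` stand in for CUDA's built-in `blockIdx.x`, `blockDim.x`, `threadIdx.x`, and `gridDim.x`. Nothing here runs on a GPU or in parallel; it only shows the indexing scheme (a "grid-stride loop") that lets each thread own its own subset of the data, using SAXPY (out = a*x + y) as the example calculation.

```python
# Sequential emulation of how one CUDA kernel launch spreads work across threads.
# block_idx / block_dim / thread_idx / grid_dim mirror CUDA's built-in variables;
# here they are ordinary loop counters, so nothing actually runs in parallel.


def saxpy_kernel(block_idx, block_dim, thread_idx, grid_dim, a, x, y, out):
    """Body of one 'thread': handles indices i, i + stride, i + 2*stride, ..."""
    stride = block_dim * grid_dim            # total number of threads in the grid
    i = block_idx * block_dim + thread_idx   # this thread's global index
    while i < len(x):
        out[i] = a * x[i] + y[i]
        i += stride


def launch(grid_dim, block_dim, kernel, *args):
    """Hypothetical launcher: runs every (block, thread) pair one after another."""
    for b in range(grid_dim):
        for t in range(block_dim):
            kernel(b, block_dim, t, grid_dim, *args)


def saxpy(a, x, y, grid_dim=4, block_dim=8):
    """Compute a*x + y element-wise; assumes x and y have the same length."""
    out = [0.0] * len(x)
    launch(grid_dim, block_dim, saxpy_kernel, a, x, y, out)
    return out
```

For example, `saxpy(2.0, [1.0, 2.0, 3.0], [0.5, 0.5, 0.5])` returns `[2.5, 4.5, 6.5]`. Because each thread advances by the total thread count, the same kernel body covers an array of any length, whether the grid has more threads than elements or fewer.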

- Learning CUDA will take too much effort; I should stick with a programming language I already know.

CUDA is the de facto standard for GPU parallel programming and is taught in many universities. In addition to NVIDIA’s large online forums, code examples, web training classes, and user communities, there are software companies ready to help with the first steps. In fact, because so many CUDA-based solutions are already deployed, many algorithms have already been ported to CUDA.

- Adding another processing engine will only increase system integration complexity.

Once you build a CUDA algorithm, you can "reuse" it on any other platform that supports an NVIDIA GPGPU board. Porting it from one platform to another is easy, so the approach becomes less hardware-specific and, therefore, more "generic."

NEW: Xavier-based Development System

Using NVIDIA’s latest Jetson AGX Xavier technology, the new EV178 is an evaluation system that delivers up to 11 TFLOPS (tera floating-point operations per second) and 32 TOPS (tera operations per second), while providing the best available performance per watt of any GPGPU-based development platform.

The low-power, ultra-compact small form factor (SFF) of the EV178 gives embedded engineers a reliable platform to quickly develop artificial intelligence (AI) applications and port those solutions to the high-powered A178 Thunder, Aitech’s soon-to-be-released, NVIDIA Jetson Xavier-based, rugged, fanless AI GPGPU supercomputer.

Going to Embedded World February 22-25 in Nuremberg?

Stop by Stand 2-309 to talk GPGPU with industry experts.

Want to see more rugged GPGPU in action?

Check out our expanding line of rugged GPGPU-based boards and systems

Aitech’s rugged GPGPU product line offers the most advanced solutions for video and signal processing, as well as accelerated deep learning, for the next generation of autonomous vehicles, surveillance and targeting systems, electronic warfare (EW) systems, and many other applications.

Have an industrial use for GPGPU processing technology?

Check out this white paper on how this parallel computing architecture can help in rugged industrial and commercial applications, too.

Download Whitepaper
