company insight sponsored by aitech

How is GPGPU Enabling Deep Learning in Rugged Embedded Applications?

Artificial intelligence (AI) is empowering embedded systems globally, providing ‘rational’ computer-based knowledge using data input. A subset of AI, Deep Learning is modeled after the brain’s neural networks and takes AI further by making connections between multiple data networks. GPGPU technology is instrumental in managing the increased computational demands generated by this new paradigm of embedded processing.

Increasing System Complexity Brings New Challenges

The software applications used by embedded systems in consumer and military applications have become far more complex, putting extreme computation demands on the hardware itself.

System architects, product managers and engineers are finding that traditional CPU-based systems can’t keep pace with the growing number of data streams within a given system or the processing requirements to manage the data. In addition to the influx of data sources, other contributors to system complexity include continued technology upgrades, shrinking system size and increasing densities within a system itself.

Two of the major difficulties systems engineers are facing as embedded computing continues to evolve can be summed up in a few words: loss of calculation power and increased power consumption.

Managing Higher Computation Needs

In response, High Performance Embedded Computing (HPEC) systems are utilizing the specialized parallel computational speed and performance of General-Purpose Graphics Processing Units (GPGPUs), enabling system designers to bring exceptional power and performance into rugged, extended-temperature small form factors (SFFs). This is making the application of artificial intelligence, and its subset of deep learning, a more useful tool in embedded systems.

GPGPU enables embedded systems to compute high volumes of data simultaneously, while enabling inference-based logic (deep learning).

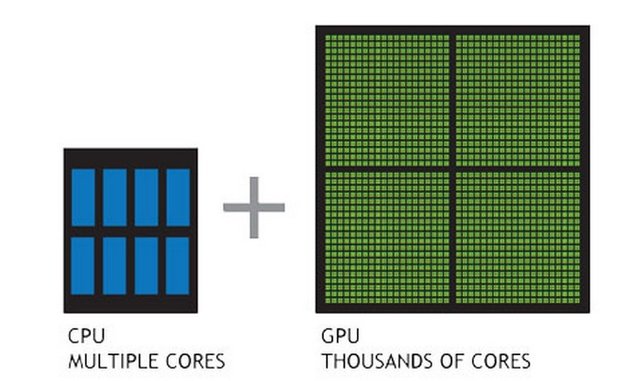

GPU-accelerated computing combines a graphics processing unit (GPU) with a central processing unit (CPU) to accelerate applications, offloading some of the computation-intensive portions from the CPU to the GPU.

Originating in the gaming industry, where graphics and data processing traditionally set new limits, GPGPU processors are now serving as the heart of a new wave of rugged embedded systems. These new supercomputers can meet the calculation demands of compute-intensive applications using GPGPU’s high performance per watt, yet are packed in an ultra-compact footprint.

Enabling More Calculation Power

As processing requirements and data inputs continue to increase, the main computing engine—the CPU—will eventually choke. However, the GPU has evolved into an extremely flexible and powerful processor that can handle certain computing tasks better, and faster, than the CPU, thanks to improved programmability, precision and parallel processing capabilities.

While trying to reuse existing software applications, we are constantly adding new features and implementing new requirements. The code then becomes increasingly complex, the application becomes CPU “hungry” and eventually, you are faced with:

- Complex CPU Load Balancing – we are walking a fine line between optimum load and overload, in order to satisfy our SW application demands.

- CPU Choking – eventually we end up with an extremely slow response from the operating system, resulting in SW architecture changes that need to balance between an acceptable response time and getting the job done.

- Upgrading and Overclocking – other methods for increasing computation power in an embedded system have drawbacks of their own: upgrading can be costly, and overclocking is detrimental to component life, while still not providing optimal performance.

Using a GPU instead of a CPU reduces development time and “squeezes” maximum performance per watt from the computation engine.

GPU-Accelerated Computing

As stated, GPU-accelerated computing is the use of a graphics processing unit (GPU) together with a central processing unit (CPU) to accelerate applications. By offloading some of the compute-intensive portions of the application to the GPU, while the rest of the application continues to run on the CPU, the system frees up resources and manages the processing load and heat much better.
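How much an application gains from this kind of offloading depends on how much of its work can actually move to the GPU. A rough way to reason about it is Amdahl's law, sketched below in Python. The function name `offload_speedup` and the figures used (a 20x GPU speedup and the three offloaded fractions) are assumptions chosen purely for illustration, not measurements from any product, and the sketch ignores the cost of moving data between CPU and GPU.

```python
def offload_speedup(offloaded_fraction: float, gpu_speedup: float) -> float:
    """Estimate overall application speedup using Amdahl's law.

    offloaded_fraction: share of the original run time moved to the GPU (0..1).
    gpu_speedup: how many times faster that share runs on the GPU (assumed).
    """
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if gpu_speedup <= 0.0:
        raise ValueError("gpu_speedup must be positive")
    # Time still spent on the CPU plus the shortened time spent on the GPU,
    # both expressed as fractions of the original run time.
    remaining_on_cpu = 1.0 - offloaded_fraction
    return 1.0 / (remaining_on_cpu + offloaded_fraction / gpu_speedup)


# Hypothetical figures, for illustration only.
for fraction in (0.5, 0.9, 0.99):
    overall = offload_speedup(fraction, 20.0)
    print(f"{fraction:.0%} offloaded at 20x on the GPU: {overall:.2f}x overall")
```

The takeaway from the formula is that the portion left on the CPU quickly becomes the limiting factor, which is why the compute-intensive, parallelizable parts of an application are the ones worth offloading.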

So, how does the GPU operate faster than the CPU?

The GPU has evolved into an extremely flexible and powerful processor because of:

- Programmability

- Precision (Floating Point)

- Performance – thousands of cores to process parallel workloads

- Increased speed, thanks to the demands from the giant gaming industry

NVIDIA® offers a concise explanation:

A simple way to understand the difference between a CPU and GPU is to compare how they process tasks. A CPU consists of a few cores optimized for sequential serial processing, while a GPU has a massively parallel architecture consisting of thousands of smaller, more efficient cores designed for handling multiple tasks simultaneously.

The parallel computing architecture of GPGPU can manage vast numbers of data streams at once, far surpassing what a CPU can traditionally handle.
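The difference in programming model can be sketched in a few lines of plain Python. This is only a simulation of the idea, not GPU code: the `launch` helper is a hypothetical stand-in that calls the kernel once per thread index in turn, whereas real GPU hardware would run those invocations concurrently across its cores. The function names and sample data are assumptions made for illustration.

```python
def saxpy_sequential(a, x, y):
    """CPU-style: a single loop walks the elements one after another."""
    out = []
    for i in range(len(x)):
        out.append(a * x[i] + y[i])
    return out


def saxpy_kernel(thread_id, a, x, y, out):
    """GPU-style: each logical thread computes exactly one output element."""
    if thread_id < len(x):  # guard threads that fall past the end of the data
        out[thread_id] = a * x[thread_id] + y[thread_id]


def launch(kernel, num_blocks, threads_per_block, *args):
    """Hypothetical stand-in for a GPU launch.

    Runs every (block, thread) index one at a time; on real hardware these
    kernel invocations would execute in parallel.
    """
    for block in range(num_blocks):
        for thread in range(threads_per_block):
            kernel(block * threads_per_block + thread, *args)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(x)

threads_per_block = 4
# Round up so that every element is covered by some thread.
num_blocks = (len(x) + threads_per_block - 1) // threads_per_block

launch(saxpy_kernel, num_blocks, threads_per_block, 2.0, x, y, out)
print(out == saxpy_sequential(2.0, x, y))
```

Because no element's result depends on any other, the kernel invocations can run in any order or all at once, which is the property that lets thousands of GPU cores work on the same data set simultaneously.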

Unprecedented Data Processing

NVIDIA’s latest GPGPU technology is the Jetson AGX Xavier SoM, which is the heart of Aitech’s A178, a soon-to-be-released rugged SFF GPGPU-based AI supercomputer that will extend the company’s full line of powerful, compact GPGPU-based products. All of these GPGPU-based platforms are suited for distributed systems in a number of military and defense environments, especially for video and signal processing in the next generation of autonomous vehicles, surveillance and targeting systems, and EW applications.

Numerous Implementations

Many applications benefit from GPGPU technology; in fact, any application that involves mathematical calculation can be a very good candidate for this technology. These can include:

- Image Processing – threat detection, vehicle detection, missile guidance, obstacle detection, etc.

- Radar

- Sonar

- Video Encoding and Decoding (NTSC/PAL to H.264)

- Data Encryption/Decryption

- Database Queries

- Motion Detection

- Video Stabilization

In essence, GPGPUs have elevated embedded systems to provide better artificial intelligence through deep learning across many industries by reliably managing higher data throughput and balancing system processing for more efficient computing operations.